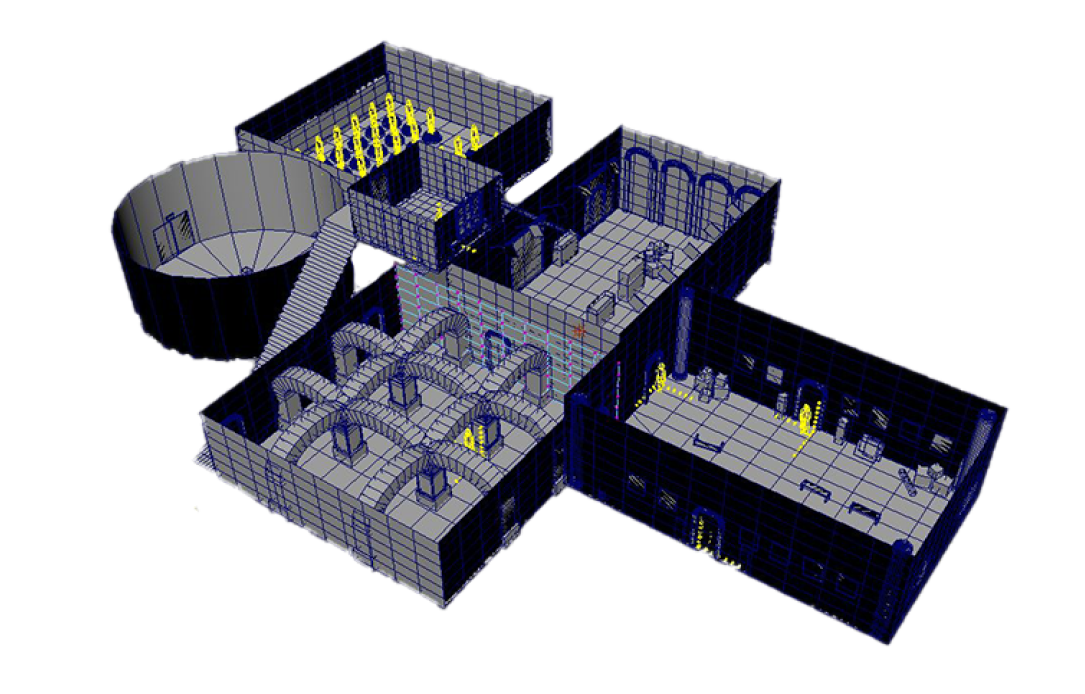

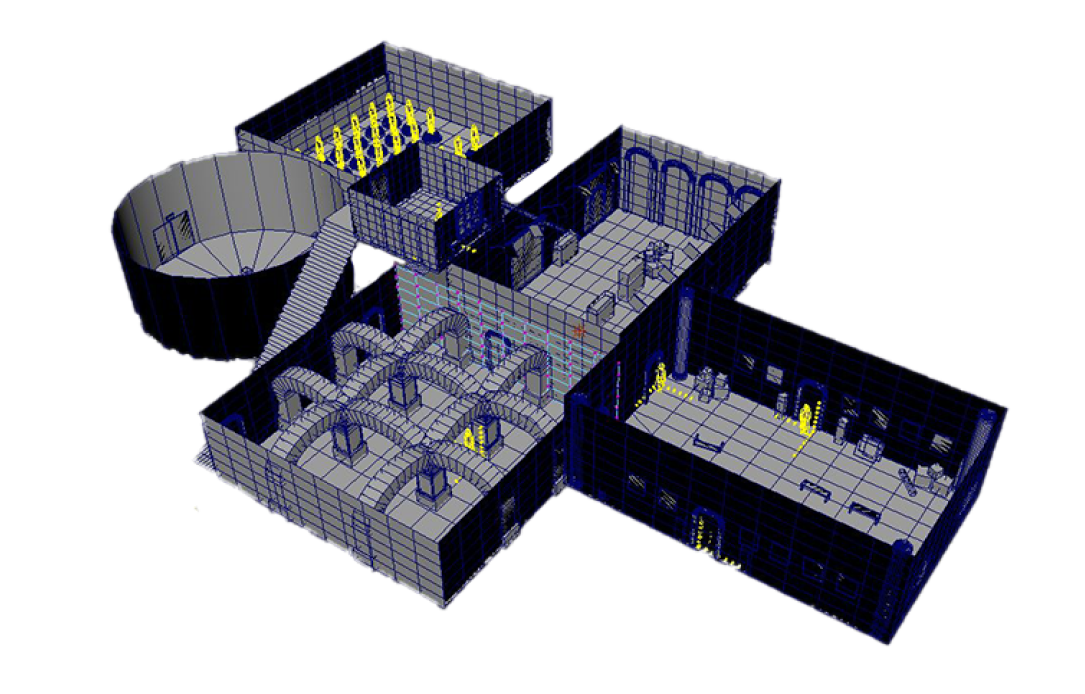

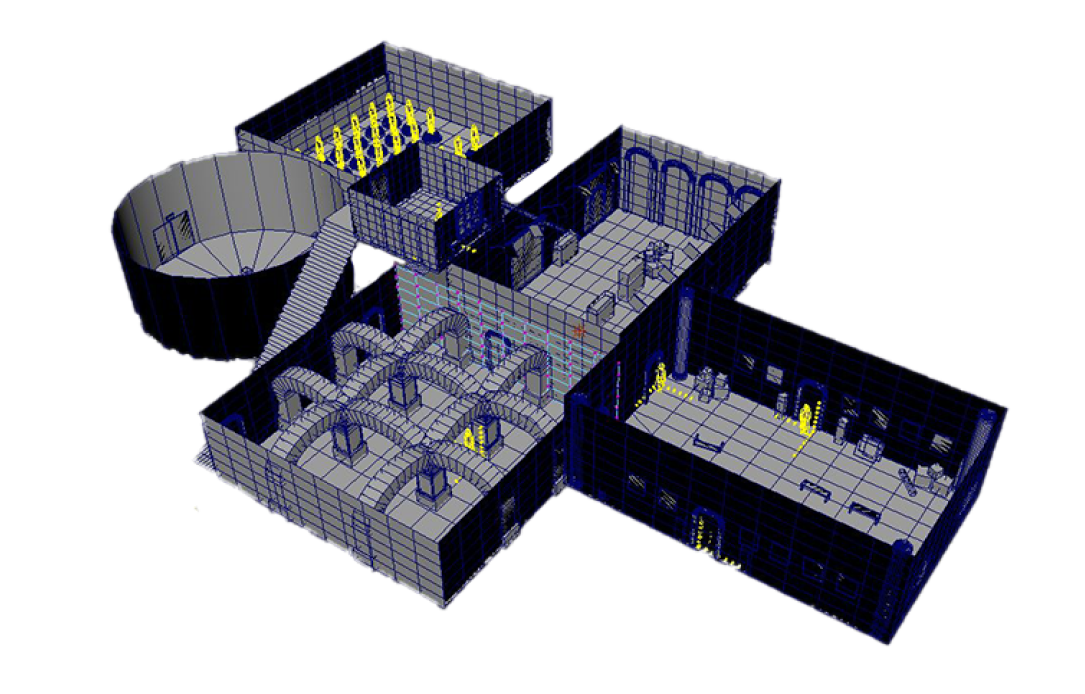

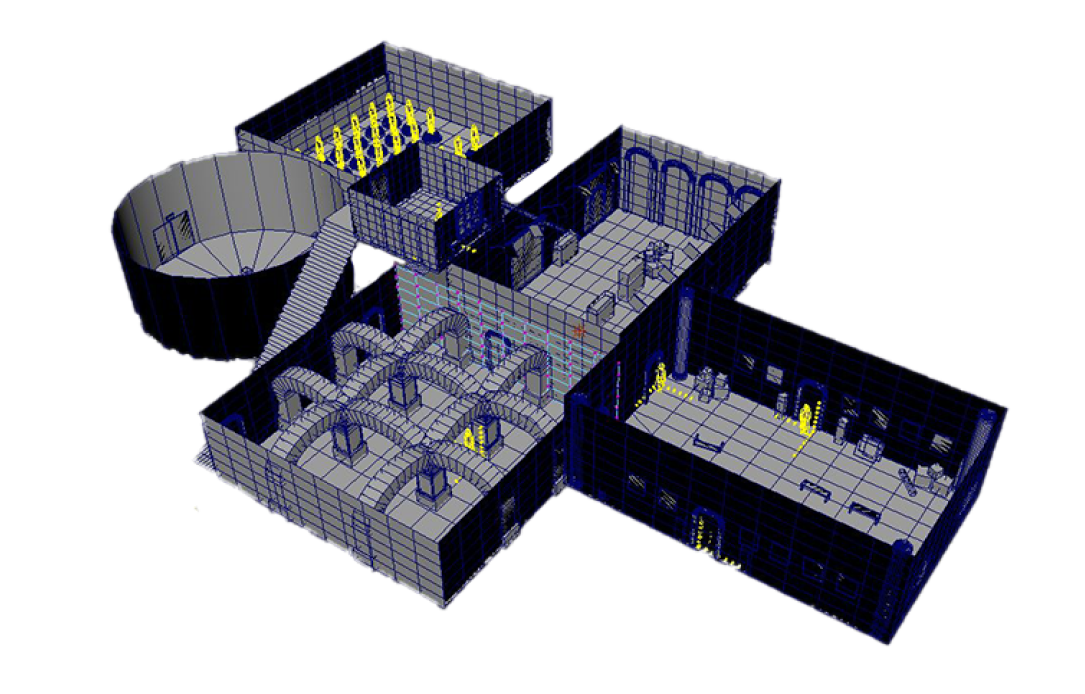

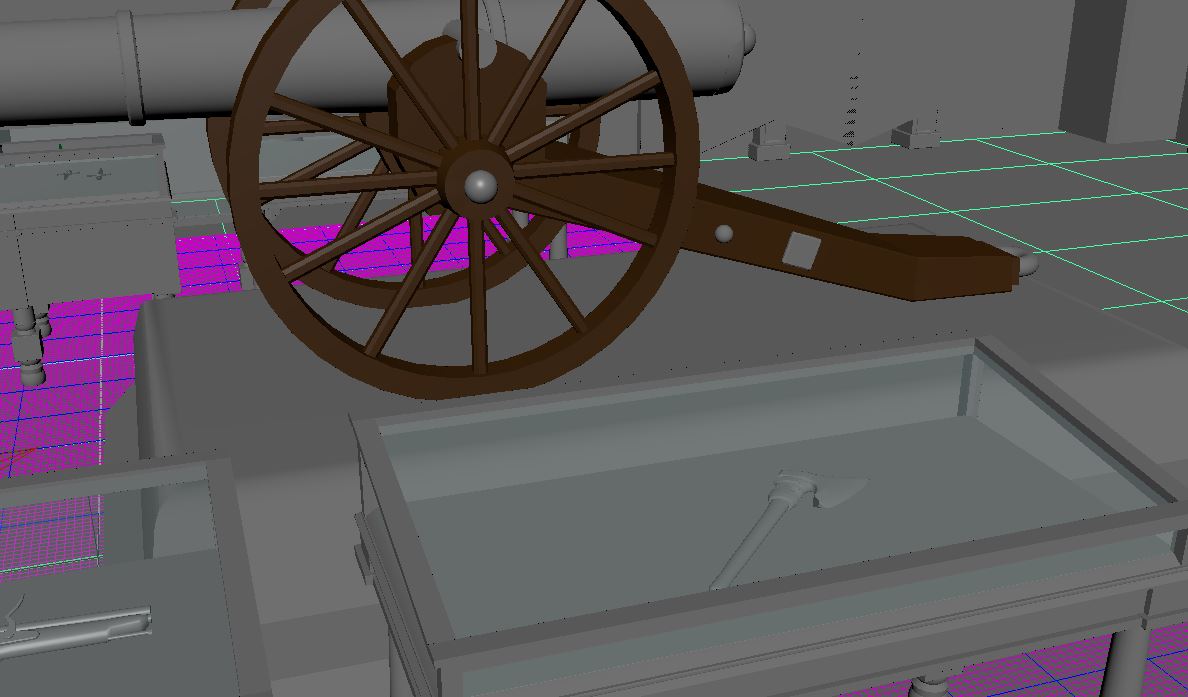

I modeled many assets for the project's final design, including the entire ‘medieval room’ as well as many artifacts, containers, display cases, and other props throughout the museum. I began by gathering reference images to work from so that my assets would look more realistic. After creating an initial shape, I refined each model by adding vertices and sculpting, then placed the finished asset within the larger scene. In addition to modeling, I created many textures and UV maps for our assets. As a group, we exported our models into Unity, where we added lighting and sound effects to make the space more immersive.